AWS S3 Glacier Deep Archive Cost computation

There are a bunch of online articles and Reddit posts that explain the pricing, but I’ll redo the computation here, based on the S3 pricing calculator and my own understanding.

Update (July 15, 2024): Increased recommended average size from 128MB to 256 MB, taking into account multipart upload cost.

First, you need to choose your region carefully. us-east-1 (N. Virginia) seems to be the cheapest. I do not care too much about locality, as I only plan to back up and restore fairly infrequently.

The cost of storage, backup, and restore operations depends a lot on the average file size. I found that keeping the average size above 256 MB is a good tradeoff (low overhead cost, while still allowing fine-grained restore operations).

It goes without saying that this is provided without guarantee; please double-check my numbers.

Storage cost

To quote the pricing page:

For each object that is stored in the […] S3 Glacier Deep Archive storage classes, AWS charges for 40 KB for index and metadata with 8 KB charged at S3 Standard rates and 32 KB charged at […] S3 Deep Archive rates.

For example, to store 1 TB, with an average file size of 1 MB, the storage cost per month will look like this:

- Number of files: 1 TB / 1 MB = 1048576

- Actual S3 Glacier Deep data: 1 TB * $0.00099 / GB = $1.01376

- S3 Glacier Deep overhead: 1048576 * 32 KB * $0.00099 / GB = $0.03168

- S3 Standard overhead: 1048576 * 8 KB * $0.023 / GB = $0.184

- Total cost: $1.23 / TB / month

For average file sizes of around 128 MB and above, the overhead cost becomes negligible.
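The storage arithmetic above can be sketched in a few lines of Python. This is only an illustration under the prices quoted in this post (us-east-1, $0.00099 and $0.023 per GB per month); the function name `monthly_storage_cost` and its parameters are my own, not anything provided by AWS.

```python
KB_PER_GB = 1024 * 1024
MB_PER_GB = 1024


def monthly_storage_cost(total_gb, avg_file_mb,
                         deep_archive_per_gb=0.00099,
                         standard_per_gb=0.023):
    """Monthly cost in USD of keeping total_gb in Deep Archive.

    Each object carries 32 KB of overhead billed at the Deep Archive
    rate and 8 KB billed at the S3 Standard rate.
    """
    n_files = total_gb * MB_PER_GB / avg_file_mb
    data = total_gb * deep_archive_per_gb
    deep_overhead = n_files * 32 / KB_PER_GB * deep_archive_per_gb
    standard_overhead = n_files * 8 / KB_PER_GB * standard_per_gb
    return data + deep_overhead + standard_overhead


# Compare a few average file sizes for 1 TB (1024 GB) of data.
for size_mb in (1, 16, 128, 256):
    cost = monthly_storage_cost(1024, size_mb)
    print(f"{size_mb:>4} MB files: ${cost:.4f} / TB / month")
```

With 1 MB files the overhead adds about 21% on top of the raw data cost; at 128 MB it is well under 1%.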

Backup cost (upload)

Backing up the data also comes at a cost, due to the cost of PUT operations. As usual with cloud providers, ingress bandwidth is free.

For example, for 1 TB of 128 MB files:

- Number of files: 1 TB / 128 MB = 8192.

- Operation cost: 8192 * $0.05 / 1000 requests = $0.41 / TB

- Bandwidth cost: 1 TB * $0 / GB = $0

- Total cost: $0.41 / TB

This is where using big files saves a lot. 1 MB files would cost a whopping $52.43/TB to upload.
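As a quick check of these two figures, here is a minimal sketch assuming one PUT request per file (no multipart upload) and the $0.05 per 1000 requests rate used above. The function name `put_upload_cost` is hypothetical.

```python
def put_upload_cost(total_gb, avg_file_mb, put_per_1000=0.05):
    """Upload cost in USD when every file is sent with a single PUT."""
    n_files = total_gb * 1024 / avg_file_mb
    return n_files * put_per_1000 / 1000


# 1 TB uploaded as 128 MB files versus 1 MB files.
print(f"128 MB files: ${put_upload_cost(1024, 128):.2f} / TB")
print(f"  1 MB files: ${put_upload_cost(1024, 1):.2f} / TB")
```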

Multipart upload (added July 15, 2024)

There’s one more thing to consider. To speed up the upload process, it is common to upload using multiple connections and upload files in chunks.

Multipart upload is documented here. We can ignore the cost of storing multipart parts during the operations (that would be short), but the operations cost is still significant:

Both CreateMultipartUpload and UploadPart are billed at S3 Standard rates […] with only the CompleteMultipartUpload request charged at S3 Glacier Deep Archive rates.

The default boto3 transfer configuration will upload the data in chunks of 8 MB. In this case (1 TB of 128MB files again):

- Number of files: 1 TB / 128 MB = 8192.

- Number of chunks: 1TB / 8 MB = 131072

- Operations: 8192 * $0.005 / 1000 = $0.041 / TB (CreateMultipartUpload, 1/file)

- Operations: 131072 * $0.005 / 1000 = $0.66 / TB (UploadPart, 1/chunk)

- Operations: 8192 * $0.05 / 1000 = $0.41 / TB (CompleteMultipartUpload, 1/file)

- Bandwidth: 1 TB * $0 / GB = $0

- Total cost = $1.11 / TB

This is a significant increase: about 2.7 times the $0.41 / TB of uploading each file with a single PUT.
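The multipart breakdown can be reproduced with a short function that takes the chunk size as a parameter. This is a sketch under the request prices quoted in this post ($0.005 per 1000 at S3 Standard rates, $0.05 per 1000 at Deep Archive rates); the name `multipart_upload_cost` is mine.

```python
import math


def multipart_upload_cost(total_gb, avg_file_mb, chunk_mb,
                          standard_put_per_1000=0.005,
                          deep_archive_put_per_1000=0.05):
    """Request cost in USD of uploading total_gb with multipart uploads."""
    n_files = total_gb * 1024 / avg_file_mb
    parts_per_file = math.ceil(avg_file_mb / chunk_mb)
    n_parts = n_files * parts_per_file
    # CreateMultipartUpload: one per file, billed at S3 Standard rates.
    create = n_files * standard_put_per_1000 / 1000
    # UploadPart: one per chunk, billed at S3 Standard rates.
    upload = n_parts * standard_put_per_1000 / 1000
    # CompleteMultipartUpload: one per file, billed at Deep Archive rates.
    complete = n_files * deep_archive_put_per_1000 / 1000
    return create + upload + complete


# 1 TB of 128 MB files with 8 MB chunks.
print(f"${multipart_upload_cost(1024, 128, 8):.2f} / TB")
```

Changing `chunk_mb` shows how quickly the UploadPart term shrinks as chunks get larger.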

If I want to stay near the default number of connections (10), I need at least 8 chunks per file:

- 128 MB files, 16MB chunks: $0.78 / TB

- 256 MB files, 32MB chunks: $0.39 / TB

- 512 MB files, 64MB chunks: $0.19 / TB

- 1 GB files, 128MB chunks: $0.097 / TB

I think 256MB is a good tradeoff (less than half a month of storage cost to upload the data).
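For reference, here is roughly how the chunk size could be set with boto3. This is a sketch, not a tested backup script: `chunk_size_for` and `upload_to_deep_archive` are hypothetical helper names, and the 8-parts-per-file target is simply the rule of thumb from this section. `TransferConfig`, `upload_file` and the `StorageClass` extra argument are the regular boto3 ones.

```python
import os

MB = 1024 * 1024


def chunk_size_for(file_size_bytes, parts_per_file=8, min_chunk=8 * MB):
    """Chunk size that splits a file into about parts_per_file parts.

    Never goes below boto3's default chunk size of 8 MB.
    """
    # Ceiling division, so that we never exceed parts_per_file parts.
    wanted = -(-file_size_bytes // parts_per_file)
    return max(min_chunk, wanted)


def upload_to_deep_archive(path, bucket, key):
    """Upload one file straight to Deep Archive (assumes boto3 is installed)."""
    # Imported here so the helper above can be used without boto3.
    import boto3
    from boto3.s3.transfer import TransferConfig

    config = TransferConfig(
        multipart_chunksize=chunk_size_for(os.path.getsize(path)),
        max_concurrency=10,  # boto3's default number of threads
    )
    s3 = boto3.client("s3")
    s3.upload_file(path, bucket, key,
                   ExtraArgs={"StorageClass": "DEEP_ARCHIVE"},
                   Config=config)


print(chunk_size_for(256 * MB) // MB, "MB chunks for a 256 MB file")
```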

Restore cost (download)

Restoring the data also costs money. The first step is to retrieve the object from “Deep Glacier” to “Standard”.

There are two retrieval options: Standard and Bulk. Standard is much more expensive (~10x), but faster (12h vs 48h).

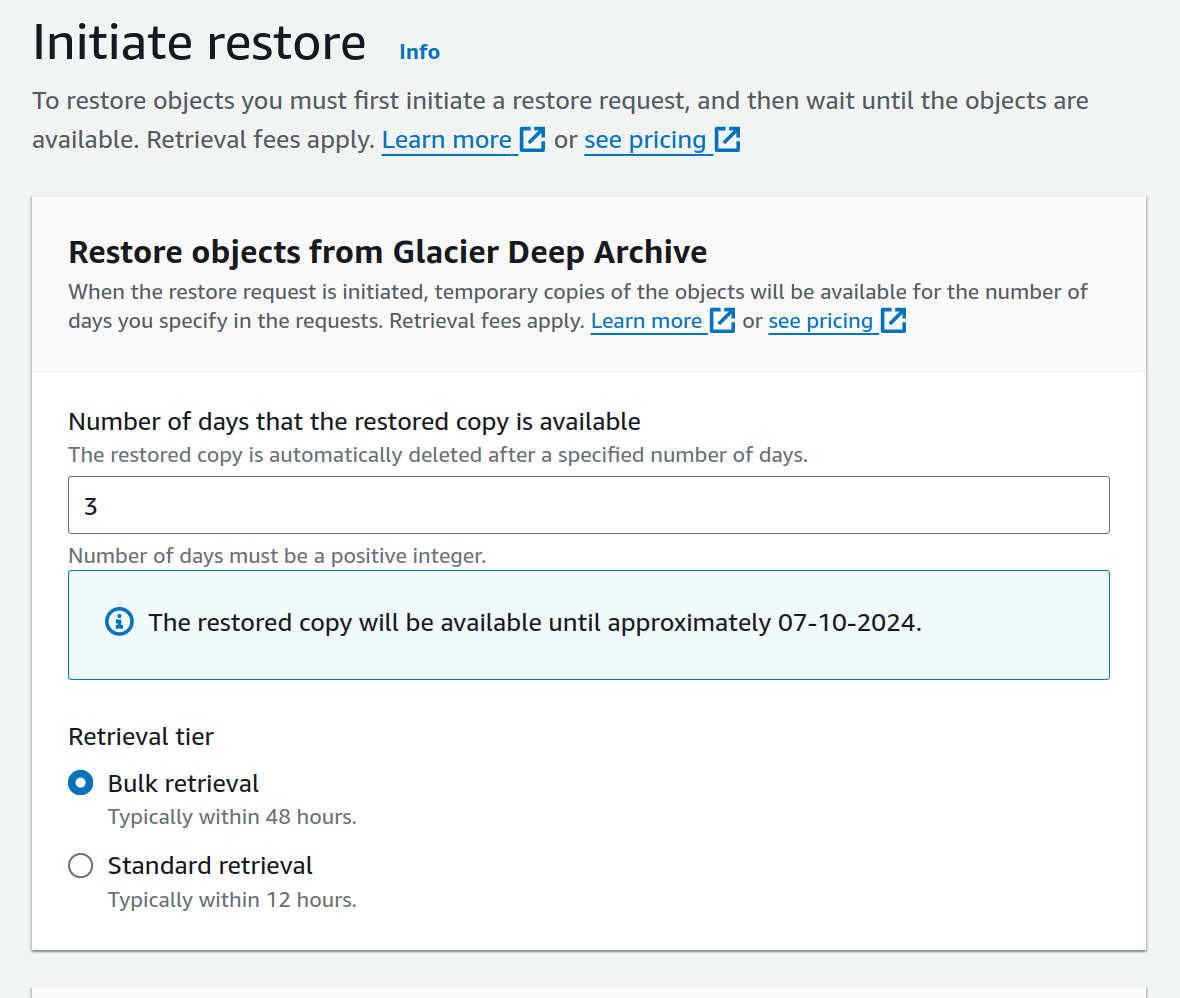

The web UI (and also the API) allows you to select how many days to keep the data in Standard storage, which you will be charged for. Of course, you need to set a long enough number of days so that you have time to download the data.
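Through the API, the same choice (retrieval tier and number of days) goes into the `RestoreRequest` argument of `restore_object`. A minimal sketch, assuming a boto3 S3 client is passed in; the helper names `build_restore_request` and `request_restore` are mine.

```python
def build_restore_request(days=7, tier="Bulk"):
    """Build the RestoreRequest payload for a Deep Archive object."""
    # Deep Archive supports only the Bulk and Standard tiers.
    if tier not in ("Bulk", "Standard"):
        raise ValueError(f"unsupported tier for Deep Archive: {tier}")
    return {
        "Days": days,  # how long the restored copy stays available
        "GlacierJobParameters": {"Tier": tier},
    }


def request_restore(s3_client, bucket, key, days=7, tier="Bulk"):
    """Ask S3 to restore one object; s3_client is e.g. boto3.client('s3')."""
    return s3_client.restore_object(
        Bucket=bucket,
        Key=key,
        RestoreRequest=build_restore_request(days, tier),
    )
```

The call only starts the restore job; the object becomes downloadable once the job finishes, and the temporary copy is billed at S3 Standard rates for the requested number of days.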

AWS Web UI restore interface


Also, egress bandwidth is charged, but the first 100 GB/month are free, so if you only restore a small amount of data, or if you are willing to spread the download over many months, costs can be limited.

For example, for 1 TB of 128 MB files:

- Number of files: 1 TB / 128 MB = 8192.

- Retrieval cost/operation: 8192 * $0.025 / 1000 requests = $0.20 / TB

- Retrieval cost/GB: 1 TB * $0.0025 / GB = $2.56 / TB

- S3 standard storage cost (7 days): 1 TB * $0.023 / GB * 7/30 = $5.50 / TB

- Bandwidth cost: 1 TB * $0.09 / GB = $92.16

- Total cost: $100.42 / TB

- Total cost for the first 100 GB: $0.81 / 100 GB (no bandwidth cost)

Here, the cost is heavily dominated by the egress bandwidth. If one can stay under the 100 GB/month of free bandwidth, the price becomes a lot more interesting.
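The Bulk restore figures can be recomputed with the sketch below, using the prices quoted in this post. The function name `bulk_restore_cost` and the `free_egress_gb` parameter are my own; setting `free_egress_gb` to 100 models a restore that fits within the free monthly bandwidth.

```python
def bulk_restore_cost(total_gb, avg_file_mb, days=7,
                      request_per_1000=0.025,
                      retrieval_per_gb=0.0025,
                      standard_per_gb=0.023,
                      egress_per_gb=0.09,
                      free_egress_gb=0):
    """Cost in USD of a Bulk restore followed by a full download."""
    n_files = total_gb * 1024 / avg_file_mb
    requests = n_files * request_per_1000 / 1000
    retrieval = total_gb * retrieval_per_gb
    # Restored copies sit in S3 Standard for `days` days.
    temporary_storage = total_gb * standard_per_gb * days / 30
    egress = max(0, total_gb - free_egress_gb) * egress_per_gb
    return requests + retrieval + temporary_storage + egress


# 1 TB with all bandwidth paid for, then 100 GB within the free allowance.
print(f"1 TB:   ${bulk_restore_cost(1024, 128):.2f}")
print(f"100 GB: ${bulk_restore_cost(100, 128, free_egress_gb=100):.2f}")
```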

Ignoring bandwidth, Standard restore is about 4 times pricier than Bulk. Once bandwidth has to be paid for, the difference shrinks to roughly 20%.

Assuming no bandwidth cost, restoring a single 128 MB file costs $0.001 with Bulk restore and $0.004 with Standard restore. If only a few files need to be restored, the costs are negligible either way and there is no reason to use the slower Bulk restore.

Calculator

Again, it goes without saying this is provided without guarantee, please double check my numbers.

- Average file size: MB

- Multipart upload chunk size: MB

- Cost (prefilled with us-east-1, N. Virginia, as of July 2024)

- S3 Standard: $/GB/month

- S3 Glacier Deep Archive: $/GB/month

- PUT, COPY, POST, LIST requests (Standard): $/1000 operations

- PUT, COPY, POST, LIST requests (Deep Archive): $/1000 operations

- Retrieval (Deep Archive, Bulk): $/1000 operations

- Retrieval (Deep Archive, Bulk): $/GB

- Retrieval (Deep Archive, Standard): $/1000 operations

- Retrieval (Deep Archive, Standard): $/GB

- Bandwidth: $/GB

- Free bandwidth: GB / month

